Evaluation is one of the key areas of the “test process improvement” (TPI) model [Koomen, 1999]. The TPI book contains suggestions for implementing and improving the evaluation process (section 7.19, “Evaluation”).

Evaluation form

The evaluators usually detect many defects, and it is often decided to enter these in a defect administration. At the end of the evaluation meeting, fields such as status, severity, and action then have to be updated for each defect. These labour- and time-intensive activities can be minimised as follows:

- Evaluators record their comments on an evaluation form.
- These comments are discussed during the evaluation meeting.
- At the end of the meeting, only the most important comments are registered in the defect administration as defects. Please refer to Defects Management for more information on the defect procedure.

An evaluation form contains the following aspects:

Per form

- Identification of the evaluator
- Identification of the intermediate product
  - document name
  - version number/date
- Evaluation process data
  - number of pages evaluated
  - evaluation time invested
- General impression of the intermediate product

Per comment

- Unique reference number
- Clear reference to the place in the intermediate product to which the comment relates (e.g. by specifying the chapter, section, page, line, or requirement number)
- Description of the comment
- Importance of the comment (e.g. high, medium, low)
- Follow-up actions (to be filled out by the author with e.g. completed, partially completed, not completed)

Note: This form is particularly useful for reviewing documents. When reviewing software or walking through a prototype, the aspects on the form need to be modified.
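The form described above can be sketched as a small data structure. This is only an illustration, not a prescribed format: the class and field names (`EvaluationForm`, `Comment`, `Importance`, `defects_to_register`) are hypothetical, and the rule that only comments of high importance become defects is an assumption standing in for "only the most important comments".

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Importance(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Comment:
    reference: str           # unique reference number
    location: str            # chapter, section, page, line, requirement number
    description: str
    importance: Importance
    follow_up: str = ""      # filled out by the author, e.g. "completed"


@dataclass
class EvaluationForm:
    evaluator: str
    document_name: str
    version: str             # version number/date
    pages_evaluated: int
    hours_invested: float
    general_impression: str = ""
    comments: list[Comment] = field(default_factory=list)

    def defects_to_register(self, threshold=Importance.HIGH):
        """Return the comments important enough to enter as defects.

        The threshold is an assumption; the meeting decides in practice.
        """
        return [c for c in self.comments if c.importance >= threshold]


# Illustrative data only
form = EvaluationForm("J. Tester", "Use case UC-12", "0.3", 12, 1.5)
form.comments.append(
    Comment("C1", "section 2, step 4", "Alternative flow missing", Importance.HIGH)
)
form.comments.append(
    Comment("C2", "page 3", "Typo in actor name", Importance.LOW)
)
print([c.reference for c in form.defects_to_register()])  # ['C1']
```

Keeping the comments on the form and filtering them at the end of the meeting mirrors the idea that the defect administration only receives the subset that needs tracking.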

Evaluation technique

When using an evaluation technique, practice has shown that there are several critical success factors:

-

The author must be released from other activities to participate in the evaluation process and process the results.

-

Authors must not be held accountable for the evaluation results.

-

Evaluators must have attended a (short) training in the specific evaluation technique.

-

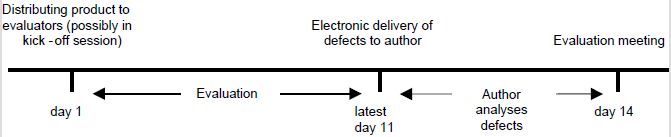

Adequate (preparation) time must be available between submission of the products to the evaluators and the

evaluation meeting (e.g. two weeks). If necessary, the products can be made available during a kick-off. See figure

1 below for a possible planning of the evaluation programme.

-

The minutes secretary must be experienced and adequately instructed. Making minutes of all defects, actions,

decisions and recommendations of the evaluators is vital to the success of an inspection process. Sometimes the

author takes on the role of minutes secretary, but the disadvantage is that the author may miss parts of the

discussion (because he must divide his attention between writing and listening).

-

The size of the intermediary product to be evaluated and the available preparation and meeting time must be tuned

to each other.

-

Make clear follow-up agreements. Agree when and in which version of the intermediary product the agreed changes

must be implemented.

-

Feedback from the author to the evaluators (appreciation for their contribution).

Figure 1: Possible planning of an evaluation programme

The planning above does not take into account any activities to be carried out after the evaluation meeting. These might include modifying the product, re-evaluation, and final acceptance of the product. If relevant, such activities must be added to the planning. The activities ‘modifying the product’ and ‘re-evaluation’ may be iterative.

Practical example of evaluation

In an organisation in which the time-to-market of various modifications to the information system had to be short, the modifications were implemented by means of a large number of short-term increments.

The testers were expected to review the designs (in the form of use cases). A lead time of two weeks for a review programme was not a realistic option. To solve the problem, Monday was chosen as the fixed review day. The ‘rules of the game’ were as follows:

- The designers delivered one or more documents for review to the test manager before 9.00am (if no documents were delivered, no review took place on that Monday).
- All documents taken together could not exceed a total of 30 A4-sized pages.
- The test manager determined which tester reviewed which document.
- The review comments of the testers had to be returned to the relevant designer before 12.00 noon.
- The review meeting was held from 2.00pm to 3.00pm.
- The aim was for the designer to modify the document the same day (depending on the severity of the comments, one day later was allowed).
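The first two rules above (delivery before 9.00am and a total of at most 30 pages) lend themselves to a simple intake check. The sketch below is hypothetical: the names `Submission` and `check_monday_batch` are invented for illustration, and rejecting an oversized batch outright is an assumption, since the example does not say how the organisation handled that case.

```python
from dataclasses import dataclass
from datetime import time

MAX_TOTAL_PAGES = 30               # limit stated in the rules
SUBMISSION_DEADLINE = time(9, 0)   # documents due before 9.00am


@dataclass
class Submission:
    document: str
    pages: int
    delivered_at: time


def check_monday_batch(submissions):
    """Return (accepted, reason) for the documents offered on a review Monday."""
    on_time = [s for s in submissions if s.delivered_at < SUBMISSION_DEADLINE]
    if not on_time:
        return False, "no documents delivered before 9.00am, so no review"
    total = sum(s.pages for s in on_time)
    if total > MAX_TOTAL_PAGES:
        # Assumption: an oversized batch is rejected as a whole.
        return False, f"{total} pages exceeds the limit of {MAX_TOTAL_PAGES}"
    return True, f"{len(on_time)} document(s), {total} pages"


# Illustrative data only
batch = [
    Submission("UC-1", 12, time(8, 30)),
    Submission("UC-2", 10, time(8, 45)),
]
print(check_monday_batch(batch))  # (True, '2 document(s), 22 pages')
```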

Perspective-based reading

Participants in an evaluation activity often assess a product with the same objective and from the same perspective, and a systematic approach during preparation is often missing. The risk is that the various participants find the same types of defects. Using a good reading technique can improve this. One commonly used technique is perspective-based reading (PBR). Properties of PBR are:

- Participants evaluate a product from one specific perspective (e.g. as developer, tester, user, project manager).
- The approach is based on the what and how questions. A procedure lays down which parts of the product must be evaluated from one specific perspective and how they must be evaluated. Often a procedure (scenario) is created for each perspective.

PBR is one of the ‘scenario-based reading’ (SBR) techniques. Defect reading, scope reading, use-based reading and horizontal/vertical reading are other SBR techniques that participants in an evaluation activity can use. Please refer to e.g. [Laitenberger, 1995] and [Basili, 1997] for more information.
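As a rough illustration of the PBR idea of one scenario per perspective, the sketch below pairs each participant with a scenario that records the what and the how. Everything in it is hypothetical: the `Scenario` class, the `assign_scenarios` helper, the scenario texts, and the round-robin assignment are assumptions made for the example, not part of any published PBR procedure.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass(frozen=True)
class Scenario:
    perspective: str   # the role from which the product is read
    what: str          # which parts of the product to evaluate
    how: str           # how to evaluate them


# Illustrative scenario texts only
SCENARIOS = [
    Scenario("tester", "use case flows",
             "try to derive a test case for each flow"),
    Scenario("user", "actor steps and screens",
             "check each step against daily work practice"),
    Scenario("developer", "data and interface descriptions",
             "check whether each item can be built as specified"),
]


def assign_scenarios(participants, scenarios):
    """Give each participant one scenario, cycling when participants outnumber scenarios."""
    return dict(zip(participants, cycle(scenarios)))


assignment = assign_scenarios(["Ann", "Bob", "Cem", "Dee"], SCENARIOS)
for name, scenario in assignment.items():
    print(f"{name}: read as {scenario.perspective}")
```

Spreading participants over different scenarios is what reduces the risk, mentioned above, of everyone finding the same types of defects.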
